Artificial Intelligence and Formal Verification Part 1: Introduction

Part 1 of who knows how many.

This essay is aimed at CS practitioners (such as working programmers) and the explanation is going to be fairly technical at times. That means some of my readers may not find the content of this series particularly interesting or digestible. Don’t despair; most of my posts are not going to be deep technical dives. If this topic isn’t your thing, hit delete and move on; I’ll be posting about other things soon enough.

If, on the other hand, you’ve got an interest in software engineering or AI, or have ever been curious about how software might be made radically more reliable and trustworthy, this may very well be right up your alley.

Preface

One theme I’m probably going to discuss a lot on this blog: Artificial intelligence is going to transform almost everything in our civilization, and generally for the better.

There are a vast number of applications for AI, from finding new antibiotics to automated herbicide-free weeding. The technology often has surprising uses (see, for example, image generation, which no one in the 1960s or even the 1990s anticipated1) and we have no idea what most of the applications for the technology are going to be.

However, I’m a computer scientist2, and better ways to write programs are something I care about, and so I’ve been pondering the use of AI in software engineering.

One such application, which most people in CS aren’t thinking about yet, is automating the formal verification of software, which is to say, mathematically proving that programs are correct. The topic is a bit obscure even by CS standards — most computer scientists don’t really know what formal verification is, or have only vaguely heard of it but have never touched it — and so the idea may be new to you even if you have been programming for decades.

So, this is the first in a series intended to explain what formal verification means, why more widespread use would be transformative to software engineering, and how one might go about using specialized machine learning systems that aren’t much like AGIs3 to solve the problem.

I intend to explain this in enough depth that a computer scientist without a background in the topic (i.e. most computer scientists) can follow what I mean, and unfortunately that is going to take more space than I like.

I‘ll be posting this in several parts, and trying to make each of them stand on its own so that even if you don’t follow one part, the rest still make sense, and you don‘t have to swallow an elephant to understand the overall idea.

What’s the Point of Formal Verification?

Everyone who has ever programmed a computer (and everyone who has ever used a program) is aware of the fact that software has bugs. Sometimes those bugs are just annoying, sometimes they cause you to lose work, sometimes they open up your servers to attack over the internet and you discover that your company’s confidential customer data is being auctioned off online.

For a long time it felt like bugs were simply a part of the price we paid for having software at all, and there wasn’t much to be done about it. Academic researchers did a lot of thinking,4 people wrote books,5 but mostly everyone fumbled about in the dark.

However, that’s changed over the decades, at first slowly, and now much faster.

“Software Engineering” was once more an aspiration than an actual discipline. When I started programming,6 even quite basic tools like source code control systems were not widely available. Today, there are well developed engineering practices in use among professional programming teams that have enabled far more ambitious projects to be executed than people used to believe possible.7

Testing has gone from ad hoc and primitive to a systematic discipline with well understood best practices, and good teams now try to fully automate their test runs so they can be executed every time a change is made. Code is now carefully tracked by “forge” systems that not only provide version control but allow team members to watch and review each other’s work, track bugs and feature requests, and document their systems.8 Continuous test, integration, and deployment have also completely changed the way people think about how they develop systems. All of these (and more) have made a real impact on the complexity of systems people can successfully deliver and the quality of those systems. “Software Engineering” is no longer an aspiration, it’s now a reality.9

Let’s now turn to formal verification, and how it could fit into the business of software engineering. As I’ve already mentioned, formal verification is the process of creating a mathematical proof that a program does what the programmer intended it to do.

Most programmers know about testing of course and why you want it. Without testing, you are just hoping that your software works. If you’re deploying something that millions of people use, you want knowledge, not just hope.

A good test gives you actual evidence that a program meets some specification, by checking if it behaves as intended on some finite set of cases. Test thoroughly enough, and you get quite a lot of evidence that what you’re releasing actually works, and that the rocket will not blow up on the pad, the word processor will not eat the user’s text, the new trading system will not lose millions of dollars a minute.

Unfortunately, any test is, by its very nature, limited. Tests can be quite extensive, but they cannot cover all possible cases.

Formal verification is like testing in that it exists to give evidence that a program meets a specification, but it improves on testing by replacing partial evidence with a mathematical proof. By their very nature, proofs cover all possible cases. One may be proving the wrong thing (and I’ll talk quite a bit about that problem later), but you get excellent assurance that the property you proved indeed holds, and if you asked the right question, the system will behave as you expected, at least provided that the rest of the system also works as expected.10

Formal verification was, until the last twenty years, mostly a laboratory curiosity, and had never been used on a substantial piece of real-world code. More recently, however, breakthroughs in both the techniques being used and the usability of verification tools have led to the formal verification of real world software in use by billions of people.

For example, there now exist formally verified compilers like CompCert and CakeML, formally verified microkernel operating systems like seL4 and CertiKOS, a bunch of formally verified cryptographic code from Project Everest and others (which you’re probably using to read this very blog post), and even formally verified hardware designs (including several formally verified RISC V processors).11

Unfortunately, such formal verification still requires incredible amounts of human expertise and labor, and so only a very limited number of artifacts have been verified.

I think this is an area which is ripe for a revolution, in part because of artificial intelligence. AI may serve to drive the area from two directions.

First, as more and more software gets written by AIs, the human engineers working on such systems will need to be able to trust that the software does what the systems writing it say that it does. Formal verification is an excellent way to do that. Given a checkable formal proof, you do not need to understand the details of a piece of code nearly so well; you can treat it like a black box that follows the specification.

Second, AI systems may be able to do much or even all of the tedious part of verification, and at an efficiency and scale that was previously unthinkable. Instead of taking hours of a very senior staff member to verify some tricky function, it may be possible for even quite junior engineers to do it in moments. This means we’ll be able to do vastly more verification and at an affordable cost.

Let’s now turn to what verification actually means at a high level. (The next installment in this series will give a fully worked example.)

What, Exactly, Does Verification Consist Of?

There are a lot of ways you can know that something is true. For example, you can have witnessed something personally, or you may have tried doing something repeatedly and have always gotten the same results every time. These provide a sort of statistical knowledge of a belief.

Mathematical proof is a different beast. It‘s a way of showing, rigorously, that subject to certain assumptions, a particular proposition is known to always be true.12

Most programmers are familiar with the notion of mathematical proof, though perhaps they haven‘t done many proofs since their high school geometry class, where they learned things like how to demonstrate, with rigorous reasoning, that the square of the length of the hypotenuse of a right triangle is absolutely positively always equal to the sum of the squares of the lengths of the two other sides.13

In high school geometry, the assumptions made explicit by stating a small number of axioms that we start with, like “if equals be added to equals, the wholes are equal,” and “through a given point P not on a line L, there is one and only one line in the plane of P and L which does not meet L.”14 From those simple (and hopefully mostly “self evident”15) assumptions, one can derive propositions like the Pythagorean Theorem in such a way as to leave little or no doubt as to the correctness of the result.

The proofs people usually encounter in math classes are informal, that is, written on paper, written mostly in natural human language, and written on the premise that human beings will read them, work through the steps in their heads, and will be able to figure out whether or not the argument makes sense. Unfortunately, it turns out that incorrect arguments are often widely believed; it‘s straightforward to find lists of mathematical “proofs” that were later disproven.16 Doing mathematical proofs in natural language turns out to be harder than you would think.

However, it‘s possible to avoid the problem of reasoning in English by using a rigorous language, not unlike a programming language, for one’s proofs. Such a language is known as a “formal system”.17

In such a system, conceptually, a proof is expressed in a machine parsable language (which, as we will see shortly, is sometimes quite literally a programming language) and can itself be checked by a computer program.1819

I will not describe the full history of such systems; it’s long and this essay is more than long enough already.

However, I will mention one of the more interesting twists in the history of modern logic, the discovery of the so-called Curry-Howard Isomorphism, a deep connection between computer programs and mathematical proofs. Suffice it to say, it turns out that, if you construct a programming language correctly, types become indistinguishable from logical propositions, and functions become indistinguishable from proofs. A function of a given type is a proof of the proposition corresponding to that type.20 This allows you to do programming and proofs in the same language, which turns out to be very powerful.

Several modern proof assistant systems are languages of this style;21 for demonstration purposes in the next installment I’m going to use Coq,22 but there are other popular ones like Lean and F*.23

I’ve already written far, far too much here as introductory material. In the next part of this series, I’m going to show off a very simple insertion sorting routine, and its proof in Coq. I will warn in advance that it’s going to be a tedious slog, though there’s an extent to which that’s the point — it’s precisely because it’s a tedious slog that there’s strong motivation to find ways to make the work less tedious.

Except perhaps Robert Heinlein, who describes an AI generating video from scratch in “The Moon is a Harsh Mistress.”

At least, in so far as I’m an anything.

For those that don’t know, AGI means “Artificial General Intelligence”, meaning an AI capable of roughly matching or exceeding human performance in every skill. Such a system could obviously do proofs because humans can do proofs, but I see specialist proof systems as being potentially superior. As I hope to explain in another essay, AGI is one of two transformative technologies our civilization is about to develop, the other being molecular manufacturing.

Most of it was fruitless, but that’s just the nature of research — you have to try a lot of stuff that doesn’t work before you find some things that do.

I used to be quite fond of Fred Brooks’ The Mythical Man Month but I think it’s been superseded by history; that’s probably a long discussion in itself. These days I often recommend Feathers’ Working Effectively with Legacy Code, which is a very different sort of book, so perhaps the comparison isn’t fair.

I began for real around 1979. I still have fond memories of the PDP-8e at my high school, and of the sounds of an ASR-33 galloping along at a blistering ten characters per second (upper case only!), but I’m much happier working on a machine with 64GB of RAM, millions of times the performance, and good tools.

When Ronald Reagan proposed the Strategic Defense Initiative, one actual claim made by computer scientists arguing against it was that we simply didn’t know how to build the necessary software, because it would have been millions of lines of code. These days, that sort of thing is routine.

“Forge” platforms like GitHub and GitLab have transformed the software life cycle and even the way that many users interact with software, as has the open source movement. The fact that you can watch software being built and contribute pull requests at will even if you’re not part of a project is completely different from the way things were when I was a kid. Fred Brooks wrote a lot about communications bottlenecks as a key problem in software development, and how he didn’t see how tooling could fix that. GitHub and the like have proved that tooling can, in fact, fix a great deal.

Sometime soon I might write a bit about modern software engineering, because I still encounter a lot of managers (and organizations) who haven’t yet caught up with modern development practices and why we now do things quite differently now than we did thirty years ago, but if I do that, it’s going to be another day.

There’s some serious weaseling there, of course. Your program might do the right thing, but does the compiler? Does the library it links with? Does the operating system? Is the hardware perhaps buggy, or even if it was designed correctly, maybe this one CPU has an intermittent electrical fault? I will talk quite a bit about all that later.

It‘s been routine for a long time now (decades, in fact) to formally verify big and tricky chunks of microprocessor designs like the floating point units; what’s new is that we now have designs for complete CPUs.

Note that I‘m again using some weasel words here, like “subject to certain assumptions.” I promise we’ll discuss those in later installments.

And yet again, that’s subject to certain assumptions, like that we’re dealing with ideal right triangles in a flat Euclidean space. If you’re doing surveying of large enough triangles on the surface of the earth, which is curved, the assumptions break down. I’ll repeat, monotonously, that we’ll discuss that a lot in an upcoming installment.

Euclid gives us five axioms and five “common notions” which are much the same thing, but almost from the start of Elements begins using assumptions that aren’t actually in his list. David Hilbert showed that to be really rigorous about geometry you need a list of twenty axioms. A side note about Euclidean geometry: you can show it’s consistent and complete, which is one of the reasons it’s such a good toy domain for high school students learning what proofs are. You’ll never state a proposition that can’t be either proven or disproven.

Again, I’m using weasel words.

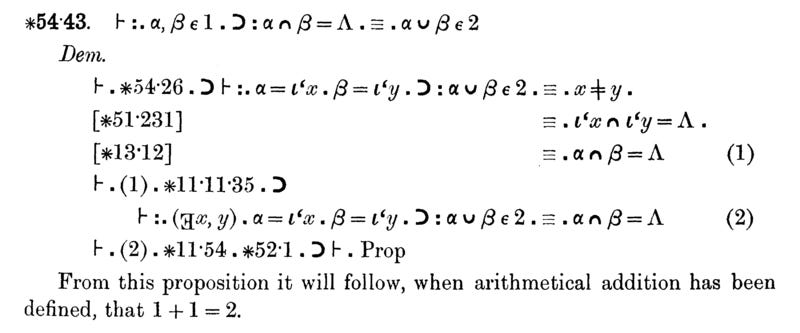

The first attempts at doing this, like Russell and Whitehead's famous “Principia Mathematica”, predate computers, and their work had to be hand checked.

The astute reader might be wondering at this point “What about Gödel’s Incompleteness Theorem? Doesn’t that ruin everything?”

Gödel showed that one could never rigorously prove a formal system to be consistent using its own rules of inference, but in practice, this has proved to be of little or no practical hindrance to either professional mathematicians or to people working on formal verification systems.

One does have to be careful in constructing such systems, but it’s pretty unheard of these days to find real inconsistencies in an underlying logic. (Yes, there are some exceptions; early versions of Martin-Löf Type Theory, which is a cousin of the logic you find in systems like Coq, suffered from Girard's paradox. This may already sound like technobabble from a science fiction film, and is probably far more detail than you probably want.)

Verification systems are often very large and complicated. How can you trust they are correct when Gödel says you can’t actually prove a system correct in itself? The trick that is usually used is to make sure that the “proof checker” at the heart of them is only a few hundred lines long, and can be manually inspected for correctness. The “proof checker” is thus the only part of the system that has to be trusted, and since it’s small, there’s more chance that it’s actually correct even though it can’t as such be proven by itself.

For those that care, which may be no one: the isomorphism is more than just a convenient sort of pun, it’s very deep. There’s a real sense in which typed lambda calculi and intuitionistic logics are the same thing. Indeed, Alonzo Church originally intended the untyped lambda calculus to be a logic, but it turned out to be inconsistent. (He then found it made a good model of computation.) The strongly normalizing version of the simply typed lambda calculus (that is, the version where all functions terminate because you don’t have unbounded recursion) is consistent, and roughly corresponds to intuitionistic propositional logic.

Some older ones, like ACL2, aren’t.

I picked Coq because I needed to pick something and I happen to know Coq better than the others. That said, Coq is a reasonably popular choice as these things go. It was created a bit over thirty years ago at INRIA, and as with all such systems, it’s a combination programming language, logic, and proof assistant.

To get technical again, such systems are dependently typed lambda calculi under the covers, typically the predicative Calculus of Inductive Constructions. Explaining what that means, or even things like “dependent types”, is way, way beyond the scope of this essay.